Prophets on the SILK road…

When prophecies finally come to pass, what comes next?

Back in the late ’90s and early 2000s, Tim Berners-Lee and a group of folks at the W3C captured the world’s attention with predictions that web content would go from purely unstructured data to useful content based on queryable conceptual structures…the Semantic Web. Soon after, a raft of standards (RDF, OWL, etc.) was introduced with the notion that uniform subscription to such standards would usher in the next golden age of the Internet. Sound familiar?

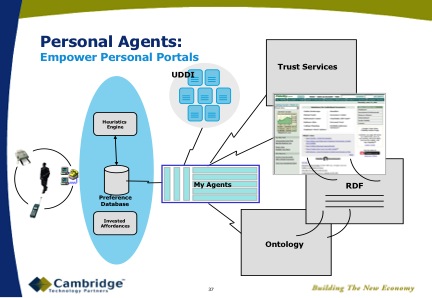

At the time, enthusiasm for this vision was such that it spurred a number of thought leaders, myself included, to predict how and when such an innovation would make its appearance as a practical instance of useful technology. My bet at the time was the creation of personal agents employing a number of standards-based, service-oriented components that would scour the web in search of answers to personal needs and inquiries. The slide below was first used in May of 2001 in conversations with customers and prospects of Cambridge Technology Partners.

A couple of things are worth noting: this slide includes heuristic engines, along with multiple agents and ontological syntax used in conjunction with RDF tagging embedded in published web content. Throw in voice recognition and this architecture, slightly reconfigured, is now the basis of Apple’s Siri agent. Unfortunately, at the time these predictions were made, no one fully appreciated how much complex effort would be required to augment existing web content to allow the useful and ubiquitous adoption of semantic frameworks.

Like it or loathe it, Apple’s Siri has upped the stakes dramatically and may prove to be the catalyst that ignites the semantic revolution predicted long ago. But not necessarily for the reasons that are most immediate and most obvious.

The Arduity of the SILK Road

For those who have followed the development of the semantic web, the SILK acronym is probably a familiar one. One of its best-known expansions is Semantic Inferencing of Large Knowledge, which is both a project and a computer language developed under the auspices of Project Halo. Project Halo is in turn a sub-project of Paul Allen’s Vulcan Incorporated, a massive conglomerate of technology, real estate, media and sports ventures and properties. Another instance is the SILK Application, a semantic startup based in Amsterdam that briefly grabbed the spotlight back in May with the beta release of its portal, which is designed to convert and host web sites based on generalized semantic concepts.

At bottom, both of these initiatives, as well as dozens of others, have tried to take the complexity out of tagging and relating unstructured content in a way that allows for computer-based manipulation of relatable concepts. So, for instance, an event can be associated with a date that in turn can be associated with a location in such a way as to allow a person to structure a query such as “find me a jazz show on October 25th within 10 miles of my current location” that can be executed over the web without the involvement of all the resources in MIT’s Media Lab. Turns out it’s not as simple as it seems.
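To make the jazz show example concrete, here is a minimal Python sketch of what that query looks like once the event, date and location relationships have already been captured as structured data. Everything in it is an assumption for illustration: the `Event` record, the `find_events` helper, the venue names, the coordinates and the year 2012 are all hypothetical, and no real semantic web API is involved. The point is that the query itself is trivial; the hard part is getting web content into this shape in the first place.

```python
from dataclasses import dataclass
from datetime import date
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_MILES = 3958.8  # mean Earth radius


@dataclass(frozen=True)
class Event:
    """Hypothetical record: an event tied to a genre, a date and a location."""
    name: str
    genre: str
    day: date
    lat: float
    lon: float


def miles_between(lat1, lon1, lat2, lon2):
    """Great-circle distance in miles, using the haversine formula."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = (sin(dlat / 2) ** 2
         + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2)
    return 2 * EARTH_RADIUS_MILES * asin(sqrt(a))


def find_events(events, genre, day, here, max_miles):
    """Return events of the given genre on the given day within max_miles of here."""
    lat, lon = here
    return [
        e for e in events
        if e.genre == genre
        and e.day == day
        and miles_between(lat, lon, e.lat, e.lon) <= max_miles
    ]


# Made-up sample data; the year is an assumption, the post only says "October 25th".
events = [
    Event("Jazz Quartet", "jazz", date(2012, 10, 25), 42.3736, -71.1097),
    Event("Far Away Jazz", "jazz", date(2012, 10, 25), 41.8240, -71.4128),
    Event("Late Jazz Jam", "jazz", date(2012, 10, 26), 42.3736, -71.1097),
    Event("Rock Night", "rock", date(2012, 10, 25), 42.3736, -71.1097),
]

my_location = (42.3601, -71.0589)
matches = find_events(events, "jazz", date(2012, 10, 25), my_location, 10)
match_names = [e.name for e in matches]
print(match_names)
```

Only the first event satisfies all three constraints at once; each of the others fails exactly one of genre, date or distance.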

Up until now, most semantic ventures have focused on taking the cost and complexity out of delivering usable semantic content to the web, in the hopes that the entities whose content was consumed in this fashion would enjoy enormous competitive benefits. Essentially, these firms wanted to be the arms merchants to the next large battle on the web. Thanks in part to the proliferation and fragmentation of standards, things have not turned out that way, and successful instances such as Siri still largely depend upon a closed architecture and a detailed, largely domain-specific articulation of conceptual relationships between unstructured data. In other words, it is still too hard to get a single, common semantic framework to operate easily over disparate domains of specific concepts and content. Understanding the theater is not like understanding the weather, which is not like understanding scuba diving, which is not like understanding skydiving. Context and domain-specific knowledge are still driving the bus.

However, one thing is becoming clear, and that is what is at stake when it comes to the monetary value of a successful semantic offering. When Tim Berners-Lee first conceived of the Semantic Web, it was difficult to imagine exactly what the value of the technology might be. Google was still in its infancy and other search technologies like Alta Vista were in various stages of ascent or decline. Late entrants such as Bing weren’t even on the drawing board. At this point there is a fairly well established notion of what the value of semantic offerings might be and how it might reshape the major players who seek to dominate the on-ramp to the World Wide Web.

A closer look at Siri exposes the reality of the current state of semantic-based computing and the effort required to make it useful. For all of its vaunted raves and failings, Siri is truly an impressive piece of technology and one that could only have been cobbled together in the last couple of years. It relies on numerous components that are made up of both self-contained and web-based services. And at the heart of its design is a concept and technology known as Active Ontologies.

Source: Apple Patent Documentation

The Siri concept sprang from several projects having roots that in some instances go back as far as 1987, such as the SOAR cognitive architecture that is still being worked on at the University of Michigan. However, more recent projects such as DARPA’s PAL (Perceptive Assistant that Learns) and CALO (Cognitive Assistant that Learns and Organizes) are given credit as the immediate antecedents to SRI’s Siri technology that Apple acquired and embedded in its smart phone. At this point, it isn’t exactly clear what technology was actually borrowed or licensed from any of these projects, and there is some speculation that a significant portion of the intellectual property stems from a clean-sheet rewrite of another project dubbed RADAR, which was also funded by DARPA but has been jointly led by SRI and Carnegie Mellon University.

Leaving aside the ancillary components and services, and there are many, the beating heart of Siri is its Active Ontology engines. Active Ontologies are unique in that they eschew the traditional view of knowledge representation as static conceptual hierarchies, or as data augmented through the use of triples à la RDF. They replace these with rule-based processing elements that represent domain-specific objects, imbuing each object with a set of behaviors that respond differently depending upon the input they are given to act upon.
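As a rough illustration of that idea, and emphatically not of Siri’s actual implementation, the Python sketch below models each domain concept as an object that carries its own rules and reacts to whatever input it is handed. The `ConceptNode` class, the `interpret` helper, the regex rules and the intent names are all hypothetical, invented here only to contrast “concept with behavior” against a static tag or triple.

```python
import re
from dataclasses import dataclass, field


@dataclass
class ConceptNode:
    """A domain concept that owns its rules rather than being a static label."""
    name: str
    rules: list = field(default_factory=list)  # (compiled pattern, handler) pairs

    def rule(self, pattern):
        """Decorator that attaches a pattern-triggered behavior to this concept."""
        def register(handler):
            self.rules.append((re.compile(pattern, re.IGNORECASE), handler))
            return handler
        return register

    def react(self, utterance):
        """Fire the first rule whose pattern matches; return None if none do."""
        for pattern, handler in self.rules:
            match = pattern.search(utterance)
            if match:
                return handler(match)
        return None


# Hypothetical restaurant domain: two behaviors, chosen by the input.
restaurant = ConceptNode("restaurant")


@restaurant.rule(r"\b(?:table|reservation) for (\d+)\b")
def book_table(match):
    return {"intent": "book_table", "party_size": int(match.group(1))}


@restaurant.rule(r"\b(italian|thai|sushi)\b")
def find_by_cuisine(match):
    return {"intent": "find_restaurant", "cuisine": match.group(1).lower()}


# Hypothetical weather domain with its own, unrelated rule.
weather = ConceptNode("weather")


@weather.rule(r"\b(rain|snow|umbrella)\b")
def precipitation(match):
    return {"intent": "precipitation_forecast"}


def interpret(utterance, domains):
    """Offer the utterance to each domain in turn; the first to react wins."""
    for node in domains:
        result = node.react(utterance)
        if result is not None:
            return node.name, result
    return None


domains = [restaurant, weather]
print(interpret("Get me a table for 4 tonight", domains))
print(interpret("Will I need an umbrella?", domains))
print(interpret("What is scuba diving?", domains))
```

The last request falls through because no domain has rules for it, which is the toy version of an assistant doing some things well and others not at all.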

Source: Modeling Human-Agent Interaction with Active Ontologies

This architecture specifically addresses one of the key difficulties that has thus far hindered the adoption of the semantic web: the lack of a single, comprehensive, generic capability suitable for manipulating the conceptual frameworks of diverse knowledge domains. In short, what SRI figured out is that when it comes to understanding embedded conceptual frameworks, one size does not fit all.

Source: Apple Patent Documentation

Thus, if you peel things back one more layer, you’ll find that each subject or category on which a user engages Siri requires its own specific Active Ontology, or the answers that come back won’t necessarily make sense. This is also why Siri can do some things very well and other things not so well, or at least not to the satisfaction of all users. When Apple released the Siri utility it did so with a limited number of domains in mind (restaurants, entertainment, weather), and those domains are often most useful when used in conjunction with the user’s location. Location, or in this instance proximity, often provides the primary context with which an agent can resolve a specific request within a specific domain.

In the scheme of things, building domain-specific ontology engines isn’t necessarily a low-cost approach to promoting the practical adoption of semantic-based technology. However, it is likely that this doesn’t matter, as proximity, not cost, is about to make the semantic web an enormous game changer.

All prophecies are local…

Readers of this blog are probably aware that Apple has recently feigned interest in a custom version of Google Now as a possible addition to its smart phone. The likelihood of this happening is somewhere around the chances of you and me simultaneously winning the Lotto. Google Now is Google’s version of Siri, based on what it refers to as its Knowledge Graph, or semantic search capability. There are obviously many reasons for Apple to keep to a proprietary semantic component for its smart phone, but there is one that stands out above the rest. Folks who follow these types of things have determined that a significant share of search activity is local and, thanks to smart phones, the fastest growing segment of local search is mobile. Reports recently released by Chitika Insights also reveal one more interesting data point: in the race for mobile search, Bing is a distant third and likely losing share by the minute.

Source: Chitika Insights

The good news for Microsoft, such as it is, is that it still maintains a healthy share of local search from desktop devices. That cushion matters less, however, as local search keeps shifting to mobile, which is where semantic search is taking hold.

The reason this is so important is that the stakes here are enormous for any player that seeks to control user access to the Internet and the subsequent revenues that accrue to the players who occupy that enviable position. A recent forecast by eMarketer estimated that search revenues in 2012 will be approximately $17B, $14.4B in the US alone. Consequently, a single percentage point of search share is worth $170m.
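The per-point figure is simple division, shown here as a quick Python check. The $17B total is the eMarketer estimate quoted above; the function name is just an illustrative choice, and whole-dollar integers are used to keep the arithmetic exact.

```python
def value_per_share_point(total_revenue_usd: int) -> int:
    """Revenue represented by one percentage point of search share."""
    return total_revenue_usd // 100


TOTAL_SEARCH_REVENUE_2012 = 17_000_000_000  # eMarketer estimate quoted above

per_point = value_per_share_point(TOTAL_SEARCH_REVENUE_2012)
print(f"${per_point / 1e6:,.0f}m per share point")  # prints "$170m per share point"
```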

If you assume for a minute that Apple’s mobile share is roughly equivalent to Google’s current mobile share, we are talking about a revenue opportunity of approximately $650m. Not in itself a huge number, but one that is growing every day.

Source: ComScore

This also points out what must be a few very uncomfortable prognostications for the current players in search. If between 24% and 28% of all search is local, a growing percentage of that is mobile, and nearly all of that will soon be semantic, where does that leave search players who currently don’t have a proprietary mobile platform?

My guess is that most if not all of the share enjoyed by Microsoft, Yahoo, Ask and AOL is currently or will soon be in play. That’s 33.4% of the market, or $5.6B in potential revenue, with the big losers here being Microsoft and Yahoo who, with neither a proprietary mobile platform nor any semantic search capabilities, are significantly disadvantaged. You can expect Microsoft to remedy this through an acquisition; probably Nokia, but at this point just about anybody would do. Yahoo, on the other hand, bereft of any mobile platform, let alone any proprietary search capabilities, is probably toast.

Timing trumps technology every time….

Few of any generation, let alone those born after the advent of the Internet, recognize the name of Philo T. Farnsworth. Yet in 1927 he perfected and applied for a patent for a device that would truly and profoundly reshape the world…the television. Farnsworth’s invention, as momentous and monumental as it was, didn’t become widely adopted until more than 20 years later. Why? Well, there were a number of circumstances and prerequisites that stood in its way: a depression, a world war, not to mention the fact that most of the developed world had yet to become electrified. The Tennessee Valley Authority, whose mission was in part to distribute electricity to rural parts of Appalachia, wasn’t even chartered until 1933.

The adoption of any innovation requires more than technology. Frequently it is a matter of having a number of economic, cultural and regulatory circumstances evolve. In the case of semantic technology it seems to have also included the development of adjacent technologies and markets, complements actually, that have finally propelled it to where everyone thought it might be 10 years ago.

But now that it’s here, you can bet it’s going to rearrange some checkers on the board.

Graphic courtesy of FreeDigitalPhotos.net