AI’s Inconvenient Truth

In a recently published piece, “Layoffs at Watson Health Reveal IBM’s Problem with AI”, Eliza Strickland writing in IEEE Spectrum laid bare the lack of finery clothing nearly all AI revenue forecasts.

It seems that when it comes to Watson, IBM’s problem stems from its inability to effectively and efficiently monetize the technology. And guess what? It’s doubtful that IBM is alone, being a leader in this space, its just had more time to realize the obvious.

Leaving aside for the moment that Watson Health is an amalgam of several acquired shops and that acquisitions are often subject to refinement through winnowing staff, something about the expectations of AI’s prospects, in general, is beginning to seem off kilter.

It could well be that IBM is merely the canary in AI’s deepening coal mine. (see The Next Cambrian Explosion – February 2016)

For instance, Gartner just asserted that AI will grow business value by 70% in 2018 alone to an astonishing $1.2 trillion. Of course, business value isn’t necessarily revenue, but it might be, just as it might be profits or costs or good will or Market Value Added or just about anything you want it to be. Pretty convenient, no? A more squishy metric it could not be. And in releasing this ephemeral insight John-David Lovelock, research vice president at Gartner said “One of the biggest aggregate sources for AI-enhanced products and services acquired by enterprises between 2017 and 2022 will be niche solutions that address one need very well. Business executives will drive investment in these products, sourced from thousands of narrowly focused, specialist suppliers with specific AI-enhanced applications.” Apart from the fact that integrating solutions from thousands of narrowly focused suppliers doesn’t sound cheap or easy, the rest of it reads like Watson Health to me.

What gives?

Well, for starters, the entire AI industry is operating under a bogus representation of what it is and what it does.

First, it isn’t artificial. Rather, it is machine rendered facsimiles of associable human artifacts; numbers, letters, words, symbols, sounds, objects, data, etc that have specific significance to humans. And next, it isn’t intelligence. It is the explicit recognition of those artifacts for purposes of machine based abstraction, articulation and manipulation. If you start with the following rough AI taxonomy what you discover is that, with the exception of Planning and Robotics, the entire universe of AI can be summed up as machine based recognition and manipulation of specific classes of associable human artifacts.

Source: Ajinkya Kulkarni, Hackernoon

Once you get past this very basic representation, other issues become more readily apparent.

For instance, the common assertion in AI Land is that bigger data generates bigger insights. This stems in part from the methods employed to acquire and create machine generated facsimiles, aka Machine Learning (ML). Inserting and back-proping a couple million pictures of a hot dog might get ML to “recognize” the image of a hot dog the next time it sees one. But it also might not (see AI: “What’s Reality But a Collective Hunch?” – November 2017).

Next, to produce higher level insights of broad or specific economic consequence one would have to capture, marshall, render, scrutinize and analyze veritable yottabytes of data (see Platform Strategies in the Age of AI – August 2016) From raw data and symbols all the way up to, through and including conceptual ontologies. So what would the power law look like that generates a “recognizable”, monetizable healthcare insight? A billion billion data points from numerous discrete knowledge domains analogously concatenated and parked in some infinitesimal flat tail that leads to one potential eureka? (see Innovation and Analogy: One of These Things is a Lot Like Another – August 2017).

And suddenly the economics of our current generation of AI begin to emerge. Achieving valuable insights from limitless data may prove harder than mining bitcoin, with a whole lot less to show for the effort.

One can hardly fault IBM for struggling here. Their own expectations for “cognitive computing” have been far more modest than your typical AI fan boy and they haven’t hesitated to say so. We are still at a very early, almost metaphysical, phase of this phenomenon (see below). The techniques currently employed are crudely laying the foundation, utilities and primitives, for higher levels of abstraction which will come later. (see AI: What’s Reality But a Collective Hunch? – November 2017)

Sure, soon you will be talking to your refrigerator but that will pale in comparison to what lies over the horizon in the next three to five years. What’s needed for the present is more measured expectations and a lot less irrational exuberance about the near term economic impact of AI.

Or should I say, machine based rendering of associable human artifacts?

Who Put the “Cog” in Cog-ni-tive

Recently, noted investor and AI proponent Kai-Fu Lee reminded an audience that there has only been a single substantial breakthrough in AI’s 70 year history and that is related to Machine Learning (ML) or, tossing a couple of more layers of nodes and some backprop into it, Deep Learning (DL). With very few exceptions, the majority of today’s AI applications are predicated on this technique and everyone who’s anyone in the world of AI would acknowledge it. However, for some reason, all of the pundits responsible for predicting AI’s future economic impact, haven’t been able to quite get their heads around exactly what this means.

Lost in AI’s new Chautauqua is the role that cognitive, not computer, science has had in its creation. But it was cognitive science, the search for how human knowledge and perceptions are acquired, that was at the heart of the foundation of ML and DL. One of the main branches of cognitive science is dedicated to linguistics and understanding how the mind ingests basic text and converts it into words and ideas that can be subsequently infused with meaning and manipulated with logic. In fact, a good deal of academic cognitive research has been devoted to the simple act of reading.

Two of the leading academics in this field are James McClelland and David Rumelhart, back in the 70’s and 80’s, they were kind of the Simon and Garfunkel of cognitive science, rock stars in their own right, and they were some of the first to imagine and articulate how the mind might go about consuming symbols and converting them into recognizable concepts and objects. Much of their thinking on this topic was published in the form of “models”, graphic depictions of the process they envisioned taking place.

Source: James McClelland, Models, 1979

Back in the 70’s James McClelland envisioned a process whereby rudimentary marks could be ingested and subsequently rendered into recognizable text. His model of this process begins with an empty field of vision that through the mechanics of sight fill with the marks and symbols of text that can ultimately be discriminated. Judea Pearl, in his newly released “The Book of Why”, picks up where McClelland’s model leaves off and provides an elegant explication of how a structured conversation between conditional probabilities and likelihood ratios can create a stochastic convergence that turns marks into symbols, symbols into letters, letters into words and words into recognizable objects. (see AI: “What’s Reality But a Collective Hunch?” – November 2017)

Source: David Rumelhart, courtesy of Judea Pearl and Dana Mackenzie, “The Book of Why”

David Rumelhart, who helped popularize semantic weighting or backwards propagation, suggested that symbolic recognition could be extended from raw text into conceptual and ontological representations suitable for deeper logical manipulation. Unfortunately, Rumelhart couldn’t confirm many of his notions concerning backwards propagation because, when he came up with them, computers didn’t posses the horsepower to provide the necessary proof. That came later and in 2012 Geoffrey Hinton and his colleagues employed the technique to demonstrate a significant improvement in machine based object recognition, today’s one and only AI technological breakthrough.

Source: Geoffrey Hinton, an early model of human consciousness,”Parallel Distributed Processing: Explorations in the Microstructure of Cognition”, James McClelland and David Rumelhart editors

If you’d like to glimpse the genesis of today’s AI get ahold of a copy of “Parallel Distributed Processing: Explorations in the Microstructure of Cognition”. But, if you do, don’t lose sight of exactly where AI, or machine rendered object recognition, stands today.

To put today’s AI into a broader, more circumspect perspective one would have to plot the larger potential of addressable problems and their potential solutions. Fortunately, Francesco Corea and some folks at Axilo have attempted to do just that.

AI Knowledge Map

Source: Francesco Corea and Axilo, “AI Knowledge Map: How to Classify AI Technologies”, courtesy of Forbes

Source: Francesco Corea and Axilo, “AI Knowledge Map: How to Classify AI Technologies”, courtesy of Forbes

In the bargain they have pointed out that today’s AI fits into a potentially very powerful, yet very small portion of the overall AI landscape, the one made up of the intersection of “Perception” and “Probabilistic Methods”. I would contend that their placement of Probabilistic Programming and Neural Networks, in the Domains of Reasoning and Knowledge is premature as neither of these techniques have been successfully proven in those domains but are extensively used in the Perception domain. Judea Pearl essentially makes this same argument, suggesting that without a provable technique that can faithfully establish the causation of independent phenomena, we are likely to be “stuck” with our current AI breakthrough indefinitely.

There’s no doubt that the economic consequences of machine rendered perception of associable human artifacts will be profound, some analysts and consultants believe that trillions of dollars in economic activity will be influenced by our current generation of AI. To put that in perspective, in today’s terms, $1T is roughly equivalent to 1% of global GDP. And no one would diminish the impact that machine recognition of cancerous lesions will have on medicine. But if you search beyond that, there’s a much smaller potential in a much flatter portion of the AI application power curve, and as the same techniques applied in medicine are applied in the inspection, sorting and grading of eggs, potatoes and strawberries, the marginal impact of economic influence will rapidly decline.

The enthusiasm fueling today’s AI economic forecasts seems to all come from the pinnacle applications of the machine recognition power curve and it could well be argued that if machine recognition is a relatively small portion of the overall AI problem space, then we are in for a transformative revolution beyond our wildest dreams. But it could be equally argued that if today’s AI economic forecasts are viewed exclusively from the summit of AI’s current technological potential, there are a whole lot of potatoes that lie in the tedious, mundane flat expanse that lies just beyond it.

…meanwhile, the canary gets another whiff of gas

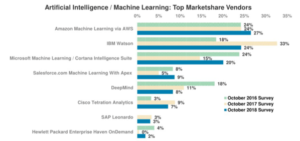

In the run-up to 3Q18 earnings, MarketWatch published a recent Alphawise/Morgan Stanley CIO survey on preferred AI vendors. Curiously enough, IBM experienced a sudden and sharp decline in preference, which caused MarketWatch to speculate about IBM’s impending Watson earnings.

Source: Alphawise/Morgan Stanley 3Q18 CIO survey courtesy of MarketWatch

And true to form IBM didn’t disappoint, declaring a 6.0 percent decline in 3Q18 revenues attributable to Cognitive and Software Solutions, by all accounts some of the hottest market segments on the planet. About a week after that, Forbes reported that Deborah DiSanzo, the head of IBM’s Watson Health, and ostensibly the leader of its AI business efforts was leaving the company. And a week after that IBM announces that it is buying RedHat, not so much a technology company as an aging insurance annuity, a business model near and dear to IBM’s heart (see HPE Builds a Boat in Its Basement – December 2016).

As stated back in July, when you compare explicit AI revenues to analyst forecasts, something just doesn’t add up. That is of course if you can obtain revenue statements about AI from any of the major players. Most of the major social and software players couch their AI results in vagaries such as improved “efficacy of existing operations” in things like search and security. And when it comes to hardware, unless you’re talking about an application specific hardware instance, changes in revenue are attributed to general market conditions such as holiday gaming releases and increased interest in bitcoin mining operations. And as for AI start-ups, there’s not much revenue to report but a boat load of valuations, paper proxies for potential future revenue that may or may not materialize.

Source: Tractica

A simple average of analysts AI revenue forecasts suggest that, as a technology market phenomena, it is poised to grow at a compound rate of approximately 37% y-o-y. That’s sell the house and bet the farm kind of stuff. IDC recently came out and said that AI attributable revenues would reach $77.6b in 2022 and if you extrapolate using their expected rate of growth would achieve about $200b by 2025. Analysts and consultants, the likes of Forrester, Gartner and McKinsey, have said that the near term horizon for business value, the one we are currently trans versing, would amount to trillions of dollars.

So where is it?

It hasn’t shown up in the financials of the Dow or the S&P. It hasn’t even shown up in the stratospheric valuations of the Nasdaq that are still priced to existing business models and existing business results. And it hasn’t shown up in conventional GDP analysis. Trillions of dollars in expanding GDP would, even now, start to make the radar. The EurekaHedge AI Hedge Fund Index is acting like a Watson tracking stock, down 4.6% for 2018. Google, the avowed leader in this space, says they have experienced about $170m of their quarterly revenue in “other bets” which includes a bunch of projects like Waymo and DeepMind that are classified as AI related.

Lost in a lot of the AI noise are the lessons that IBM has already learned. Leaving the MD Anderson fiasco aside, Ginni Rometty recently acknowledged that enterprise AI is hard stuff; it’s often unique, tough to distill and a slow slough to time-to-value (see AI: Crossing the Chasm One Clock Radio at a Time– August 2018) Current AI ML and DL techniques are fraught with their own issues including irreplicable results and irreducible biases, allowable in proof of concepts but unforgiveable in business and mission critical production systems, a lesson that IBM knows too well. (see AI: “What’s Reality but a Collective Hunch?” – November 2017 )

Lots of the brightest minds in AI are trying to eliminate these constraints while at the same time paving a way forward for AI’s future. In a recent paper entitled “Relational Inductive Biases, Deep learning and Graph Networks” AI experts from DeepMind; Google Brain; MIT and the University of Edinburgh proposed that a practical way out of ML and DL limitations and forward to a more generalizable AI would be the use of Graph Networks. A “graph” is a 3 tuple entity where each tuple informs and extends the constraints, inductive biases, under which it can operate in combinatorial computations. A “graph network” is a network of such entities under which combinatorial computation can be conducted.

A 3-tuple graph

Source: “Relational Inductive biases, deep learning, and graph networks”

What makes this approach interesting is that each node or graph on the network has its own internal set of metaphysics that it maintains while interacting with other nodes. Those of you familiar with the early development of the “semantic web” will remember the use of 3 tuple entities (RDF) as a key building block in the ontological and relational interaction of data (see Prophets on the SILK Road – October 2012).

Finally, there are a host of practical circumstances that don’t augur well for today’s AI enthusiasm. With respect to market projections, the implicit faith that AI is some homogeneous, contestable, global market is completely misplaced. Sovereign entities will begin to exert tight control over these technologies and the data they operate over. It is very unlikely that China will be buying any AI technology that hasn’t been perfected and controlled as Chinese intellectual property. Steal it? Sure. Buy it? Not very likely. To varying degrees the same will be true for US and EU markets. So when you begin to parse global markets by sovereignty, patents, regulations and applications, realizable market value may not be nearly as big as it might otherwise appear.

Privacy concerns could potentially pose another obstacle. Any instance of AI that consumes data on individuals is likely run into strict regulatory concerns. Not too long ago Google’s DeepMind inadvertently got cross wise with UK health authorities while innocently assisting in a project. Assuming the harmonization of privacy policies, as advocated by the likes of Apple, you can expect that explicit consent will have to be acquired prior to incorporating any personal data in AI analysis, not just health care information but any personal data.

And let’s not forget legal liabilities, a largely untested frontier of AI applications. Healthcare, life sciences, driver-less everything, finance, product design, the list is endless. So would the mere purchase of a cough syrup require a personal release of liability? That might slow things down a bit.

Recently, the current iteration of AI technology has been likened to the introduction of electricity and that it will soon permeate every facet of our lives. And to some extent that is already true. (see AI: The Next Cambrian Explosion – February 2016) The golden age of Edison, Bell and Westinghouse started in the 1800’s with the invention of the arc lamp in 1802 and the first electric motor in 1832. Only problem was that the first instance of publicly available electric power wasn’t until 1881 and even then it wasn’t at all ubiquitous. So, while infrastructure or other cultural prerequisites of adoption need not precede invention, it might well inhibit commercialization.

This isn’t to suggest that today’s technologies aren’t more easily or readily adopted, just that sometimes what happens on a table-top needs some time to find its way in the broader world.

Graphic courtesy of the National Hot Dog Association, all other images, statistics, citations, etc derived and included under fair use/royalty free provisions.

This is truly useful, thanks.