AI: Waking the Neuromorphic

Did you catch Intel’s big announcements at CES this year?

That’s right, touting its True VR, Intel proclaimed that 2018 will be the year virtual reality finally takes off. Which just happens to have been the big CES announcement in 2017, and the year before that, and the year before that, for about the last six or seven years.

Only kidding. The real big announcement was that Intel’s “speculative” chip vulnerability was easily corrected so long as you don’t mind persistent rebooting, combined with random data corruption, data loss and data “borrowing” in the form of surreptitious hacks.

Nahhhhh. Wasn’t that either. However, Intel did provide an important peek at our emerging future with respect to two “non-commercial” announcements.

The first was the announcement of a 49-qubit quantum processor, one of three implementations, along with one from IBM and another from Google, that could potentially achieve “quantum supremacy”, the point where quantum computing prowess surpasses all conventional forms of computing. Truly amazing stuff but likely light years from practical commercialization, and only then if you can grok electron spin and are a world-class wizard with quantum coherence. Turns out, this is pretty slippery stuff. Doing meaningful, reliable computing at the quantum level requires an enormous amount of quantum management, such that it may take orders of magnitude more quantum computing overhead to extract the value from the most modest of quantum computing facilities. It might end up that you need a 1,000-qubit supervisor to manage a 10-qubit processor; kind of brings new meaning to the notion of diminishing returns. So let’s put a post-it on this one for the time being and move on.

Next was the announcement of Loihi, a lonely, application-less processor that could arguably be categorized as an early instance of a general purpose AI chip. Late to the party but a first of its kind. Again, not a commercial release but a research release destined for university labs, though built using commercial-grade circuit sizes and methods. What makes Loihi noteworthy is that it is a general purpose instance of neuromorphic computing, one in a growing field of candidates (and not even the only one from Intel) in the search for information technology’s most promising holy grail: biologically inspired, brain-based computing, the basis for general, extendable, autonomous artificial intelligence. Next to quantum computing, this is the Big Kahuna of techdom. A prize that has been pursued ever since Carver Mead first proposed it back in 1990.

And it’s the Loihi announcement, as mundane and overshadowed as it was, that is the one that bears watching. This won’t be because Loihi is going to prove to be an overnight success. Not at all. It might only be a stepping stone. Likely, the first of several iterations. However, with the eventual addition of useful programming capabilities, a widely adopted general purpose neuromorphic chip will easily transfix the pilgrims who head to Las Vegas every January and soon replace virtual reality as the jewel in the CES crown for the next 8-10 years. But as enthusiasts of the neuromorphic genre are often reminded, if you subscribe to the notion that good things come when technology recapitulates biology, then you need grit as well as nacre if you want to make a pearl.

Why Here? Why Now?

To those of you new to neuromorphic computing, and that’s just about everyone, up until a few years ago it was considered a kind of computing backwater; the closest it ever came to being real was science fiction. The promise, however, of a neuromorphic breakthrough, a brain-based computer processor, has remained uniquely tantalizing, and for a host of practical reasons. The capabilities of today’s largest conventional computers come nowhere near those of the human brain. In part, this is due to the fact that our brains are designed to function as analog, not digital, systems. Neurological activities don’t operate in the black and white world of ones and zeros. Instead, every neuron listens to its neighbors and each neighbor has a slightly different take on what it believes is going on. So when asked to opine, each neuron “weighs” the voices that it hears and then lends its own. Weighing and producing a neurological voice, or what AI types call vectors, is an analog activity, not composed of the certainty of bits and bytes but rather the subtle voltages of cascading shades of synaptic gray. Our brains simultaneously ingest, interpret, posit and resolve a cacophony of stimulation, distilling it into nuances and differences, observations and sensations, blossoms and blooms of networked signals, murmurations made of billions of firing neurons, whispering to each other carefully crafted conjectures, that finally coalesce in a wave of memorialized conclusions, thoughts, hopes and memories.

Don’t believe me? Have a look.

As analog processors, our brains are designed to simultaneously handle enormous amounts of signal and noise, which appear to be present at the beginning of every neurological activity. And it is by virtue of the network architecture and sheer weight of neural response that we are able to facilely sort through ambiguity and “stochastically converge” on the familiar, articulating both our world as we know it as well as how we respond to it. At the same time we are only beginning to understand the role that neuro-outliers, fanciful phantoms present in every neurological process, play in the life of thoughts and imagination. The da Vincis and Einsteins of this world succeed in part because they cultivate the outliers and harvest the noise of human thought, the possible but not yet present, the denizens of the synesthetic, the capacity to conceive, create, imagine and dream. And it all runs on about 20 watts of power, less than a conventional incandescent light bulb.
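To make the idea of “stochastic convergence” a little more concrete, here is a toy sketch in Python. It is an illustration only, not a model of real neurons: the function name, the Gaussian noise and the equal weighting of every “voice” are all assumptions made for the example. Each simulated neuron reports the true signal plus its own random noise, and the population simply averages what it hears.

```python
import random


def population_estimate(true_signal, n_neurons, noise_sd=1.0, seed=0):
    """Average the noisy 'votes' of a population of toy neurons.

    Hypothetical illustration: every neuron hears the same signal,
    corrupted by its own independent Gaussian noise, and all votes
    are weighted equally.
    """
    rng = random.Random(seed)  # seeded so repeated runs give the same result
    votes = [true_signal + rng.gauss(0.0, noise_sd) for _ in range(n_neurons)]
    return sum(votes) / n_neurons


if __name__ == "__main__":
    # Larger populations should, on average, land closer to the true signal.
    for n in (10, 1_000, 100_000):
        print(n, population_estimate(0.7, n))
```

No single vote can be trusted, yet the average of a large population tends to settle near the underlying signal, which is the sense in which sheer weight of response can sort through ambiguity.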

Back when neuromorphic computing was first proposed, it was already recognized that existing, conventional, von Neumann computing architectures, those in use today, would eventually create their own bottleneck. Moving data and instructions in and out of specialized components (CPU, memory, storage) would cause things to get gummed up, and the required orchestration would act as a governor on future performance improvements. Bear in mind that this same bottleneck would haunt any component-based architecture, including those that might be neuro-inspired.

However, current thinking is that a brain-based architecture would be fundamentally different. For starters the brain, for the most part, is made up of a huge number of a single, simple component, neurons, and the connections that go between them, synapses. Nearly 100 billion neurons and 1 quadrillion synapses. So, instead of being composed of specialized functionality, separated and concentrated into uniquely designed single purpose components, orchestrated by an operating system and a bus, brains are mostly homogeneous scads of connected neurons with a degree of self-organizing specialization based on sensory, motor or interneuron activities.

Throughout the 1990s there was a growing conviction that the most promising neuro-inspired computing would be accomplished through hardware, not software, implementations. So there were numerous attempts at building ASIC (Application Specific Integrated Circuit) platforms for artificial neural networks. Most of them went nowhere. Then, around 2006, numerous AI research teams reached a similar conclusion at roughly the same time: Field Programmable Gate Arrays (FPGAs), relatively easy to configure, replicate and marshal into parallel orientation, seemed ideally suited to constructing simple convolutional, feed-forward neural networks, the kind most often used in pattern recognition tasks like object, handwriting and voice recognition. And suddenly things began to take off.

Then in 2013, some folks in Europe came up with the idea of creating something called the Human Brain Project, a pure research initiative that sought to simultaneously advance the understanding of the brain, something computer types sometimes refer to as wetware, while at the same time better understanding what, if any, technological implementation could approximate its astonishing capabilities. Bolstering this initiative was the development and creation of a first generation of neuro-inspired computing facilities such as IBM’s TrueNorth and the University of Manchester’s SpiNNaker. It wasn’t until the adoption of Nvidia’s GPUs and the announcement of Google’s Tensor Processing Unit (TPU) in 2016 that the rest of the computing industry began to acknowledge the commercial implications of neuro-inspired computing. By the time 2017 rolled around, the New York Times counted some 45 neuro-based chip start-ups taking in approximately $1.5B in investment, not counting those clandestine entities bankrolled by sovereign sources.

News From the Frontier

The recent rush of VC money into neuromorphic computing surely signals the beginning of a new phase for this technology, one that would logically include aggressive commercialization. But a quick survey of some of the existing challenges would seem to suggest that we have not yet reached a tipping point, the moment where pure research ends and practical engineering begins. For starters, the number of qualified experts in AI and neuro-computing is probably nowhere near the number needed to begin an informed coalescence and winnowing of experimental initiatives (see Artificial Intelligence – November 2017). MIT, an institution present at the founding of the very concept of AI, only just this month announced the formation of MIT Intelligence Quest, an organization whose mission is to explore the very implications of neuromorphic intelligence. Equally noteworthy is the obvious omission from public scrutiny of research initiatives conducted under the auspices of foreign governments. CB Insights just reported that China funds 48%, the largest share, of current global AI research, with an emphasis on facial recognition and hardware technologies. And Sinovation Ventures just published a report, “China Embraces AI: A Close Look at a Long View”, outlining China’s declared intention to become a leader in AI’s fourth and perhaps final wave, autonomous intelligence. And China’s native AI research and patent activity appears only to be gathering momentum.

A quick look around the neuromorphic landscape seems to paint the picture of a relatively nascent industry, confident in its destiny but still wanting in a number of critical details. The fact that it is suddenly besotted with tons of VC money probably has more to do with the money than the science that’s involved. If a couple of key considerations don’t coalesce in the current generation of neuro-inspired devices, a whole bunch of VCs could wake up with a serious timing issue on their hands. In too soon, with too much and out too early.

At the component and materials level, more options are coming forward than are falling from consideration. Only recently have materials and devices such as atomic switches, spintronics, phase change memory, carbon nanotubes and optical devices made it onto the field for consideration. This is due in part to the propensity of certain materials to behave more or less like, or better than, their biological counterparts. For instance, materials that promote spike-timing-dependent plasticity are currently viewed as biologically inspired and esteemed for their fidelity with respect to subtle changes to the character of synaptic inputs and neuron transmission. Based on this attribute alone, a preponderance of research has begun to be concentrated in memristor materials and devices (see HPE Builds a Boat in its Basement – December 2016). Several independent studies seem to confirm the unique ability of memristive materials to capture, preserve and change the synaptic weights and conductive states of neuro-inspired devices. Recently, devices with multiple memristive synapses have demonstrated faster and more accurate convergence when incorporated in deep learning networks.
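For readers wondering what spike-timing-dependent plasticity amounts to in practice, the following is a minimal sketch of the common pair-based textbook formulation, written in Python. It is not drawn from any particular memristor device or chip; the function name, the amplitudes and the time constants are illustrative assumptions. The idea is that a synapse is strengthened when the sending (pre-synaptic) neuron fires shortly before the receiving (post-synaptic) neuron, and weakened when the order is reversed, with the effect fading as the two spikes move further apart in time.

```python
import math


def stdp_weight_change(t_pre, t_post, a_plus=0.01, a_minus=0.012,
                       tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP: weight change for one pre/post spike pair.

    Times are in milliseconds. All parameter values are hypothetical
    defaults chosen for illustration, not measurements.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Pre fired before post: strengthen the synapse (potentiation).
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fired before pre: weaken the synapse (depression).
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0  # simultaneous spikes: no change in this simple variant


if __name__ == "__main__":
    for t_post in (5.0, 15.0, 40.0):
        print("post after pre by", t_post, "ms:", stdp_weight_change(0.0, t_post))
    print("post before pre by 5 ms:", stdp_weight_change(5.0, 0.0))
```

A material whose conductance naturally shifts in this timing-sensitive way is doing the synapse’s bookkeeping in physics rather than in software, which is a large part of the appeal.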

At the network and architecture level, hardly any cognitive function other than object recognition has been thoroughly explored. Convolutional, feed-forward, supervised networks (aka deep learning) have exhibited potential in “sensing” capacities such as vision and auditory systems but so far similar capabilities have not been proven for things like somatosensory (touch), conceptualization, inference or reasoning. Even Geoffrey Hinton, the father of deep learning, has expressed his reservation about the future of this technique and has trained his efforts in a new direction. It now appears that there is more than a remote possibility that deep learning is a first generation AI technique and one that might be superseded relatively quickly.

One of the concerns propelling new research into network topologies is the question of supervised versus unsupervised learning, a critical consideration when it comes to the autonomy of any future neuro-based device. Currently, deep learning is a highly supervised activity well suited to tunable, feed-forward networks, not necessarily an ideal circumstance for neuromorphic devices that require high degrees of independence and autonomy. Self-learning, unsupervised devices will likely require recursive Hebbian or Kohonen networks, which have yet to be deeply explored.
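As a rough illustration of what unsupervised, Hebbian-style learning looks like, here is a small Python sketch. It uses Oja’s rule, a standard normalized variant of the plain Hebbian update (“neurons that fire together wire together”), chosen here because the plain rule lets weights grow without bound. Nothing in it comes from a specific neuromorphic product; the function names, learning rate and input patterns are assumptions made for the example. Note that no labels or target outputs are supplied anywhere.

```python
def oja_update(weights, inputs, learning_rate=0.05):
    """One step of Oja's rule for a single linear neuron."""
    # The neuron's output is the weighted sum of its inputs.
    y = sum(w * x for w, x in zip(weights, inputs))
    # Hebbian term (y * x) plus a decay term (y * y * w) that keeps weights bounded.
    return [w + learning_rate * y * (x - y * w) for w, x in zip(weights, inputs)]


def train(patterns, weights, epochs=200, learning_rate=0.05):
    """Repeatedly present unlabeled patterns and let the weights self-organize."""
    for _ in range(epochs):
        for pattern in patterns:
            weights = oja_update(weights, pattern, learning_rate)
    return weights


if __name__ == "__main__":
    # Hypothetical data: inputs 0 and 1 usually fire together; input 2 fires alone, rarely.
    patterns = [[1, 1, 0], [1, 1, 0], [1, 1, 0], [0, 0, 1]]
    print(train(patterns, [0.1, 0.2, 0.3]))
```

With these patterns the neuron ends up weighting the two co-active inputs heavily and more or less ignoring the third, having discovered the dominant correlation in its inputs without ever being told what to look for.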

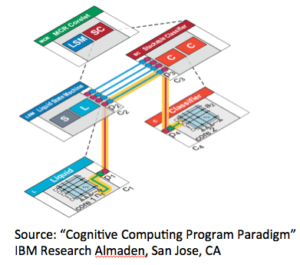

Another consideration is that software programming for neuro-computers practically doesn’t exist. There is a fundamental recognition that something will be needed to orchestrate the interaction of cognitive functions, particularly as it pertains to synaptic junctions, but exactly what that might be has not been agreed to. An early instance of this, and one that helps define the issue, is the Corelets programming framework devised by IBM for use with the TrueNorth architecture. Elegantly abstracted and articulated using an object-oriented paradigm, Corelets actually get the data into and out of neuron cores and synaptic junctures without having to conform each piece of data to the neurological model specified by the hardware. If every new neuro-contraption requires its own unique programming framework, and it appears that they might, then it is going to slow the coalescence of discovery down considerably. This issue has been raised by a number of AI researchers who are suggesting that neuro-inspired code continue to be developed in von Neumann frameworks until such time as a uniform neurological programming framework can be practically achieved. Further, we are not yet at a point where it is a foregone conclusion that a complete neurologically functioning computer will be possible through a uniform general purpose architecture rather than the piecemeal integration of discrete, specialized components: one with a discrete model for sensory assimilation, another with a unique model for inference and reasoning. And pretty soon we are right back at the von Neumann bottleneck.

Curiously, for all the time and money that has been lavished on advancing neuromorphic computing one critical element has largely gone missing: What role does sleep play in biologically based cognition and how does that factor into current efforts to emulate intelligence in neuromorphic chips and architectures? For a large generalized portion of the brain, sleep, and the slow motion waves it creates, appears to clear and reset the brain’s working registers, creating a new synaptic baseline for the consumption of waking experience, while at the same time inculcating recently acquired memories and concatenating them to those already preserved. Simply pushing the reset button on a neuromorphic device should be no big deal. But that’s not the role that sleep seems to play in preserving and promoting neurological functionality. The closer neuro-inspired devices come to brain-like functioning, the more they will likely need to incorporate brain-like maintenance functions like sleep which to date haven’t really been explored.

A first-rate explication of the existing state of neuro-computing, “A Survey of Neuromorphic Computing and Neural Networks in Hardware”, has been authored by Catherine D. Schuman, Thomas E. Potok, Robert M. Patton, J. Douglas Birdwell, Mark E. Dean, Garrett S. Rose and James S. Plank. Anyone who wants to understand the current state of neuromorphic computing should find themselves a copy of this and read it.

Give us a minute, we’ll think of something…

Given the obvious gaps and apparent lack of maturity in this technology, it would be reasonable to ask why the sudden rush of investment? VCs are notorious for two things: 1) throwing nickels around like they’re sewer lids and 2) stampeding down main street like scared steers in B-rated cowboy movies from the 1950s. And when it comes to all things AI, and increasingly neuro-computing, we have definitely hit a running-of-the-bulls moment. Usually this only happens under winner-take-all or winner-take-most conditions. And it would seem that this consensus has already been reached in the VC community. However, if you have a look at China’s level of investment, it would appear that we are facing something completely different; not necessarily a winner-take-all deal but rather a winner-take-everything deal. And everything would include intellectual property rights, fabrication rights, distribution rights, need-to-know rights and the shirt off your back. And depending upon exactly where we are in the pendulum of individual versus state-based sovereignty, human rights as well.

To assume that burgeoning venture interest in neuromorphic computing sets it on the verge of widespread commercial adoption would be to overstate the case. The first issue one would need to resolve is adoption as what? The attributes that make neuromorphic chips enticing (human-like cognition, mobility, autonomy, low power consumption) are the same ones driving the edge computing arguments. But once you get past the obvious military applications and driverless cars, then what? Killer satellites? Asteroid mining robots? Stuffing brain-like IQ into backyard, bar-b-que drones is basically a waste of neurons.

For the moment, even most marketing analysts can’t figure out exactly what market neuromorphic computing actually addresses. Some suggest that it will remain little more than a market for academic researchers for the next several years. Others maintain that neuromorphic computing is all about speeds and feeds and fold it into conventional High Performance Computing segments, which by rights belong more to the realm of quantum computing than to untethered, free-range neuro-contraptions roaming around the planet at will. Current market estimates range from a paltry hundreds of millions to low single-digit billions over the next several years.

Apart from the “adoption as what” issue, what’s giving forecasters pause is the learning and innovation cycle baked into designing and fabricating new hardware. A single iteration of a chip takes about two years, which means that the spawn of Loihi won’t get to the foundry until 2020. Assuming we’re two spins away from a final commercial release, we are talking 2022 at the earliest. So the winner here is going to have to have some patience and deep pockets.

While many would rather not come to terms with this, when it comes to neuromorphic computing one question looms larger than all the rest:

Why create a peer-like intelligence if you don’t intend to treat it like a peer?

Which would seem to suggest that there are about to be lots of new friends in our future. After all, deep down, we all know that people are vastly overrated, so why limit our relationships to only humans? Non-human companionship is a concept that Hollywood and the computer industry have been foisting on us for at least the last seven years. Bit by bit, we’ve grown to accept the notion that talking to disembodied intelligence is as natural, if not as satisfying, as talking to oneself. Embodying that intelligence into a mobile physical presence, say a Boston Dynamics robot or SpotMini, or a portable holographic phantom, or your favorite augmented reality emojicon visible in your Magic Leap glasses and connected to a ubiquitous field of sensory dust, isn’t a really big stretch.

However, if new friends aren’t necessarily your thing, you might enjoy the opportunity first suggested by Ray Kurzweil (see Spooky Action at a Distance – February 2011) to slough off this mortal coil and domicile your own intellect in some freshly minted neuro-contraption, becoming a valued “companion” to your very own family and friends so long as they deign to keep you around. Maybe they’ve already reserved a place for you on the mantel.

So, there’s a pretty substantial chance that there will be a new “companion” in your future; one whose persona, wit, curiosity, sarcasm and humor you can craft and cultivate, as well as one that can keep a watchful eye on you to secure your health and well-being, you know, kind of like a caring relative or neighbor or community or government. It is beginning to appear that the Chinese have already figured this part out.

When you look at today’s commercial instances of AI you find that they are not actually built on neuro-inspired hardware or, for that matter, neuro-inspired processes. For instance, Google’s Tensor and IBM’s Watson run on top of conventional processor architectures. The Jeopardy version of Watson was actually running SUSE Linux. In fact, most of today’s AI deep learning capabilities run on commercial hardware and rely on a supervised learning technique called back-prop, something that, as far as we can tell, our brains don’t actually employ (see AI: What’s reality but a collective hunch? – November 2017).

And this is an important consideration.

As we plumb the mechanics of our own intelligence we could well discover that beyond our ken lies a frontier of intelligence currently unimaginable but eminently discoverable and reproducible. And then what? The closer we get to the neuromorphic, the greater the likelihood that societal norms will lose their relevance. What’s to keep a person from willing their property to their dearest neuromorphic “companion”? Or to keep that “companion” from owning property or paying taxes? And based on those taxes, from casting a vote? Or with that inheritance, from acquiring other “companions”? Or for like-minded “companions” to create a community made up solely of future “companions”? I understand Vermont’s lovely this time of year.

If you sum up and project the neuromorphic vectors already in play, sometime in the next 10 years our species will enter a new epoch, one of our own making, but not one we alone will occupy or control. By then it most likely won’t be called the Neuromorphic Age, as the devices so invented will have already re-invented themselves. So what would a superior, autonomous intelligence decide to call its moment?

Probably whatever the hell it wants to.

Graphic courtesy of Michelangelo di Lodovico Buonarroti Simoni, fragment, “The Creation of Adam”, Sistine Chapel. All other images, statistics, illustrations and citations, etc. derived and included under fair use/royalty free provisions.
